Introduction

Artificial Intelligence

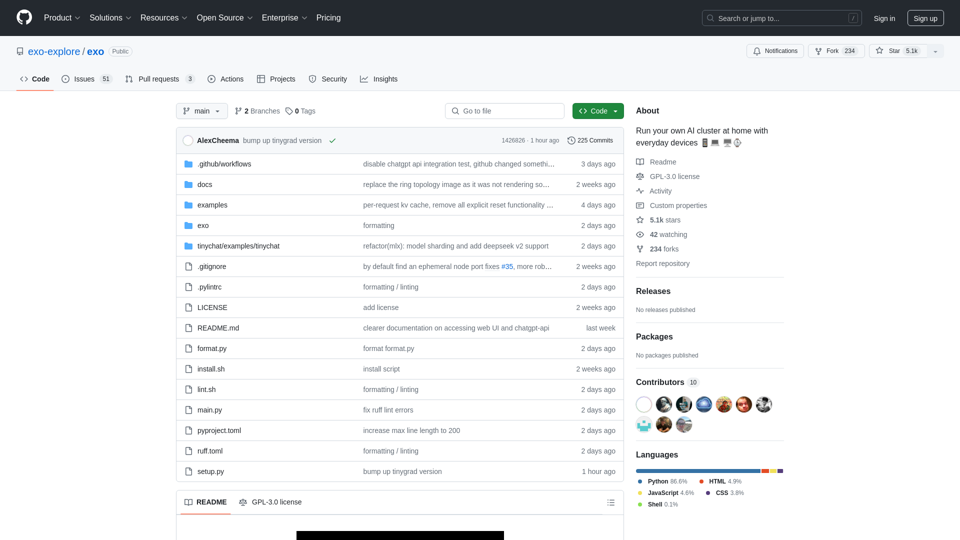

In recent years, Artificial Intelligence (AI) has become an integral part of our daily lives, from virtual assistants to self-driving cars. However, the size and computational requirements of AI models have largely confined them to large-scale data centers and cloud services. What if you could run your own AI cluster at home using everyday devices? Exo, an open-source project, aims to make this possible.

Hardware

Exo builds its cluster from everyday devices such as smartphones, laptops, and desktops. This approach reduces the cost of building and maintaining AI infrastructure, and it lets individuals contribute their own devices to a decentralized AI network.

Features

AI

Exo lets you run AI models on your personal devices, so you can experiment with AI projects without relying on cloud services or expensive hardware.

Distributed Inference

One of the key features of Exo is distributed inference, which lets you run models larger than any single device could handle on its own. This is achieved by splitting the model across multiple devices, so that each device holds and runs only part of it.
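To make the idea of splitting a model across devices concrete, here is a minimal sketch of one plausible strategy: give each device a contiguous block of layers in proportion to its available memory. This is an illustration only, not Exo's actual code; the `Device` class, the `partition_layers` function, and the memory figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    memory_gb: float  # memory available for model weights (assumed to be known)


def partition_layers(devices: list[Device], num_layers: int) -> dict[str, range]:
    """Assign each device a contiguous block of layers sized by its memory share.

    Hypothetical helper for illustration; not part of Exo's API.
    """
    if not devices or num_layers <= 0:
        raise ValueError("need at least one device and one layer")
    total = sum(d.memory_gb for d in devices)
    shards: dict[str, range] = {}
    start = 0
    cumulative = 0.0
    for i, dev in enumerate(devices):
        cumulative += dev.memory_gb
        # The last device takes whatever remains so every layer is covered.
        if i == len(devices) - 1:
            end = num_layers
        else:
            end = round(num_layers * cumulative / total)
        shards[dev.name] = range(start, end)  # may be empty for a very small device
        start = end
    return shards


if __name__ == "__main__":
    cluster = [Device("laptop", 16), Device("desktop", 8), Device("phone", 8)]
    for name, layers in partition_layers(cluster, num_layers=32).items():
        print(f"{name}: layers {layers.start}..{layers.stop - 1}")
```

During inference, each device would run only its own block and pass the intermediate activations to the device holding the next block, which is why no single device needs enough memory for the whole model.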

Automatic Device Discovery

Exo automatically discovers other devices available for distributed inference, making it easy to set up and manage your AI cluster.
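The article does not say how discovery works, so the following is only a sketch of one common approach: each device periodically announces itself on the local network, and every device keeps a table of peers it has heard from recently. The message format, the `encode_announcement` function, and the `PeerTable` class are assumptions made for illustration, and the network transport is left out entirely.

```python
import json
from dataclasses import dataclass, field


def encode_announcement(node_id: str, port: int) -> bytes:
    """Build the (hypothetical) message a device would broadcast to announce itself."""
    return json.dumps({"node_id": node_id, "port": port}).encode("utf-8")


@dataclass
class PeerTable:
    """Tracks which peers have announced themselves recently."""

    own_id: str
    timeout_s: float = 5.0
    last_seen: dict[str, float] = field(default_factory=dict)

    def handle(self, datagram: bytes, now: float) -> None:
        """Record an incoming announcement, ignoring our own broadcasts."""
        message = json.loads(datagram)
        if message["node_id"] != self.own_id:
            self.last_seen[message["node_id"]] = now

    def live_peers(self, now: float) -> list[str]:
        """Return peers heard from within the timeout window, in sorted order."""
        return sorted(
            peer for peer, seen in self.last_seen.items()
            if now - seen <= self.timeout_s
        )
```

Passing the current time in as `now` keeps the sketch easy to test; a real node would feed it `time.monotonic()` and the datagrams it receives from the network. Peers that stop announcing simply age out of the table, so devices can join or leave without manual configuration.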

ChatGPT-compatible API

Exo provides a ChatGPT-compatible API for the models running on your personal hardware. Because requests follow the same format that ChatGPT clients already use, tools and projects built against that format should be able to talk to Exo with little more than a change of server address.
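As a rough illustration of what a ChatGPT-compatible request looks like, the sketch below builds a chat request using only the Python standard library. The `/v1/chat/completions` path and the payload shape follow the widely used OpenAI chat completions convention; the base URL, port, and model name are placeholders rather than documented Exo values, so check the project's documentation for the real ones.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a chat completion request in the OpenAI-style format.

    base_url and model are placeholders supplied by the caller.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (requires a running server)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

A call such as `send(build_chat_request("http://localhost:8000", "your-model-name", "Hello"))` would then go to whichever device in the cluster is serving the API; both the address and the model name here are hypothetical.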

Device Equality

Unlike traditional master-worker architectures, Exo treats all devices as equal peers: each device contributes to the AI cluster, and none acts as a central controller.

Inference Engines

Exo supports multiple inference engines, including MLX and tinygrad, giving you the flexibility to choose the best engine for your AI projects.

Networking Modules

Exo supports multiple networking modules, including gRPC, for communication between devices in the AI cluster.

Open Source

Exo is an open-source project, so you can contribute to its development, report issues, and suggest new features. This openness helps keep the project community-driven and transparent.