Introduction

Model Description

ModelScope Text To Video Synthesis is a diffusion model that generates videos from English text descriptions. It consists of three sub-networks: a text feature extraction model, a diffusion model that maps text features into a video latent space, and a model that maps the video latent space to the visual (pixel) space. The model has approximately 1.7 billion parameters and adopts the Unet3D structure. It generates video through an iterative denoising process that starts from a video of pure Gaussian noise.
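To make "iterative denoising from pure Gaussian noise" concrete, here is a toy DDPM-style ancestral sampler in NumPy. It is a sketch under stated assumptions, not ModelScope's actual sampler: the linear beta schedule, the step count, and the latent shape (frames, channels, height, width) are illustrative choices, and `predict_noise` is a hypothetical stand-in for the Unet3D noise predictor. The returned latent would then be handed to the third sub-network, which decodes it into visual space.

```python
import numpy as np


def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> np.ndarray:
    """Illustrative linear noise schedule (an assumption, not ModelScope's)."""
    return np.linspace(beta_start, beta_end, num_steps)


def denoise_video_latent(predict_noise, shape, num_steps: int = 50, seed: int = 0) -> np.ndarray:
    """Toy DDPM ancestral sampling over a video latent.

    predict_noise(x, t) is a hypothetical stand-in for the Unet3D: it should
    return the predicted noise, with the same shape as x, at timestep t.
    """
    rng = np.random.default_rng(seed)
    betas = linear_beta_schedule(num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)

    # Start from a "video" of pure Gaussian noise: (frames, channels, height, width).
    x = rng.standard_normal(shape)
    for t in reversed(range(num_steps)):
        eps = predict_noise(x, t)
        # Posterior mean: (x_t - beta_t / sqrt(1 - alpha_bar_t) * eps) / sqrt(alpha_t)
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Add fresh noise on every step except the last one.
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(shape)
        else:
            x = mean
    return x
```

For example, `denoise_video_latent(lambda x, t: np.zeros_like(x), (16, 4, 32, 32))` runs the loop with a dummy predictor; a trained Unet3D would replace the lambda.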

Model Limitations and Biases

While ModelScope Text To Video Synthesis is a powerful tool, it is essential to understand its limitations and biases. The model is trained on public datasets such as Webvid, so its outputs may reflect biases in the distribution of the training data. It cannot produce film- or television-quality video, and it cannot render legible text. The model is trained mainly on an English corpus and does not currently support other languages. Its performance on complex compositional generation tasks still needs improvement.

Usage

How to Use

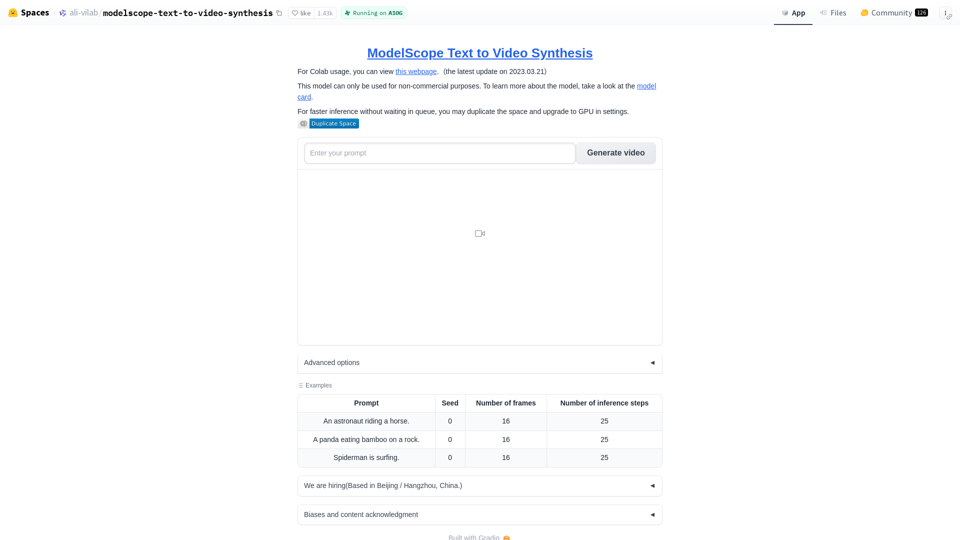

You can try ModelScope Text To Video Synthesis directly on ModelScope Studio or Hugging Face, or follow the Colab page to build it yourself. The Aliyun Notebook Tutorial also offers a quick way to start developing with this text-to-video model. The demo requires about 16GB of CPU RAM and 16GB of GPU RAM. Within the ModelScope framework, the model is called through a simple Pipeline. The input must be a dictionary whose valid key is 'text' and whose value is a short text prompt. The model currently supports inference on GPU only.
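The snippet below is a minimal sketch of calling the Pipeline with the required dictionary input. It assumes the `text-to-video-synthesis` task name and the `OutputKeys.OUTPUT_VIDEO` result key as used in the public ModelScope model card; `build_input` and `generate_video` are hypothetical helper names, and `model_dir` is assumed to be a local directory that already holds the downloaded model weights. The modelscope imports are kept inside `generate_video` so the input-building helper can be used (and tested) without modelscope or a GPU.

```python
from typing import Dict


def build_input(prompt: str) -> Dict[str, str]:
    """Build the dictionary input the Pipeline expects: a single 'text' key."""
    prompt = prompt.strip()
    if not prompt:
        raise ValueError("prompt must be a non-empty short text")
    return {"text": prompt}


def generate_video(prompt: str, model_dir: str) -> str:
    """Run text-to-video inference (GPU required) and return the output video path.

    Assumes model_dir contains the downloaded model weights.
    """
    # Local imports: only needed when inference actually runs.
    from modelscope.outputs import OutputKeys
    from modelscope.pipelines import pipeline

    pipe = pipeline("text-to-video-synthesis", model_dir)
    result = pipe(build_input(prompt))
    return result[OutputKeys.OUTPUT_VIDEO]
```

For example, `generate_video("A panda eating bamboo on a rock.", "weights")` would return the path of the generated video file on a machine that meets the GPU requirements above.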

Operating Environment

The operating environment requires Python packages such as modelscope, open_clip_torch, and pytorch-lightning.
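Before running the demo, it can help to confirm that these packages are importable and that a CUDA GPU is visible, since the model supports GPU inference only. The sketch below assumes the import names `modelscope`, `open_clip`, and `pytorch_lightning` for the listed packages, and that PyTorch is the GPU backend; `missing_modules` and `has_cuda_gpu` are hypothetical helper names.

```python
import importlib.util
from typing import Dict, List

# pip package name -> import name (assumed mapping for the packages listed above)
REQUIRED_MODULES: Dict[str, str] = {
    "modelscope": "modelscope",
    "open_clip_torch": "open_clip",
    "pytorch-lightning": "pytorch_lightning",
}


def missing_modules(module_names: List[str]) -> List[str]:
    """Return the import names that cannot be found in the current environment."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


def has_cuda_gpu() -> bool:
    """Return True if PyTorch is installed and reports an available CUDA device."""
    try:
        import torch
    except ImportError:
        return False
    return bool(torch.cuda.is_available())
```

Calling `missing_modules(list(REQUIRED_MODULES.values()))` lists the packages still to install (for example with `pip install`), and `has_cuda_gpu()` should return `True` before attempting inference.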

Training Data

The training data includes LAION5B, ImageNet, Webvid, and other public datasets. After pre-training, the image and video data are filtered using criteria such as aesthetic score, watermark score, and deduplication.
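As an illustration of this kind of filtering, here is a minimal sketch that drops samples with a low aesthetic score or a high watermark score and removes duplicates. The `Sample` record, the precomputed `content_hash` (for example a perceptual hash), and the thresholds `min_aesthetic=5.0` and `max_watermark=0.5` are all hypothetical; the actual scoring models and cutoffs used for ModelScope are not described here.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass(frozen=True)
class Sample:
    uid: str
    content_hash: str        # assumed precomputed, e.g. a perceptual hash
    aesthetic_score: float   # higher means more aesthetically pleasing
    watermark_score: float   # assumed: likelihood that a watermark is present


def filter_samples(samples: Iterable[Sample], min_aesthetic: float = 5.0,
                   max_watermark: float = 0.5) -> List[Sample]:
    """Keep samples that pass both quality thresholds, dropping duplicates."""
    kept: List[Sample] = []
    seen_hashes: Set[str] = set()
    for s in samples:
        if s.aesthetic_score < min_aesthetic:
            continue  # aesthetic filter
        if s.watermark_score > max_watermark:
            continue  # watermark filter
        if s.content_hash in seen_hashes:
            continue  # deduplication: keep the first passing copy only
        seen_hashes.add(s.content_hash)
        kept.append(s)
    return kept
```

Deduplication is applied after the quality checks, so a low-quality copy seen first does not block a later high-quality copy with the same hash.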

Citation

The model should be cited using the provided citation information.

Spaces

The model is used in several Hugging Face Spaces, including saifytechnologies/ai-text-to-video-generation-saify-technologies, ali-vilab/modelscope-text-to-video-synthesis, NeuralInternet/Text-to-video_Playground, Abidlabs/cinemascope, Libra7578/Image-to-video, Heathlia/modelscope-text-to-video-synthesis, masbejo99/modelscope-text-to-video-synthesis, zekewilliams/video, adamirus/VideoGEN, monkeybird420/modelscope-text-to-video-synthesis, and raoyang111/modelscope-text-to-video-synthesis.